Ontology alignment

Ontology alignment, or ontology matching, is the process of determining correspondences between concepts. A set of correspondences is also called an alignment. The phrase takes on a slightly different meaning in computer science, cognitive science, and philosophy.

Computer Science

For computer scientists, concepts are expressed as labels for data. Historically, the need for ontology alignment arose from the need to integrate heterogeneous databases, that is, databases developed independently and thus each having its own data vocabulary. In the Semantic Web context, where many actors provide their own ontologies, ontology matching has become critical for helping heterogeneous resources interoperate. Ontology alignment tools find classes of data that are "semantically equivalent," for example, "Truck" and "Lorry." The classes are not necessarily logically identical. According to Euzenat and Shvaiko (2007)[1], there are three major dimensions for similarity: syntactic, external, and semantic. Coincidentally, they roughly correspond to the dimensions identified by cognitive scientists below. A number of tools and frameworks have been developed for aligning ontologies, some with inspiration from cognitive science and some independently.
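The "Truck" and "Lorry" example shows why syntactic similarity alone is not enough. The following is a minimal sketch, not taken from any particular alignment tool: it compares labels character by character with Python's standard `difflib` and falls back on a small synonym table. The table `SYNONYMS` and the function names are hypothetical and stand in for an external resource such as a thesaurus.

```python
from difflib import SequenceMatcher

# Hypothetical synonym table standing in for an external lexical resource.
SYNONYMS = {frozenset({"truck", "lorry"})}


def syntactic_similarity(a: str, b: str) -> float:
    """Character-level similarity of two labels, in the range [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def label_similarity(a: str, b: str) -> float:
    """Return 1.0 for identical or listed-synonym labels, else the syntactic score."""
    a_low, b_low = a.lower(), b.lower()
    if a_low == b_low or frozenset({a_low, b_low}) in SYNONYMS:
        return 1.0
    return syntactic_similarity(a, b)


if __name__ == "__main__":
    # Labels that look alike score high on the syntactic dimension.
    print(syntactic_similarity("Truck", "Trucks"))
    # Labels that mean the same thing may share almost no characters.
    print(syntactic_similarity("Truck", "Lorry"))
    # The synonym lookup recovers the correspondence.
    print(label_similarity("Truck", "Lorry"))
```

In this sketch the two labels are matched only because the external table says so, which is one way the syntactic and external dimensions can complement each other.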

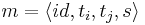

Ontology alignment tools have generally been developed to operate on database schemas[2], XML schemas[3], taxonomies[4], formal languages, entity-relationship models[5], dictionaries, and other label frameworks. These inputs are usually converted to a graph representation before being matched. Since the emergence of the Semantic Web, such graphs can be represented in the Resource Description Framework line of languages by triples of the form <subject, predicate, object>, as illustrated in the Notation 3 syntax. In this context, aligning ontologies is sometimes referred to as "ontology matching".
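As a rough illustration of the triple form, the sketch below stores <subject, predicate, object> triples as plain Python tuples and groups them into an adjacency structure that a matcher could traverse. The `ex:` names are made-up example identifiers, and the helper `to_graph` is hypothetical; a real system would more likely use a dedicated RDF library.

```python
from collections import defaultdict

# Made-up example data: each triple is (subject, predicate, object).
TRIPLES = [
    ("ex:Truck", "rdfs:subClassOf", "ex:Vehicle"),
    ("ex:Truck", "rdfs:label", "Truck"),
    ("ex:Vehicle", "rdfs:label", "Vehicle"),
]


def to_graph(triples):
    """Group triples by subject: subject -> list of (predicate, object) edges."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return dict(graph)


if __name__ == "__main__":
    graph = to_graph(TRIPLES)
    for subject, edges in graph.items():
        for predicate, obj in edges:
            print(subject, predicate, obj)
```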

The problem of ontology alignment has recently been tackled by automatically computing a matching first and then a mapping based on that matching. Systems such as DSSim, X-SOM[6], and COMA++ have obtained very high precision and recall[3]. The Ontology Alignment Evaluation Initiative aims to evaluate, compare, and improve the different approaches.
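Precision and recall are usually computed by comparing the correspondences a system finds against a reference alignment. The sketch below uses the standard set-based definitions; the function name and the example pairs are illustrative only and do not come from any evaluation campaign.

```python
def precision_recall(found, reference):
    """Compare a computed alignment with a reference alignment.

    Both arguments are iterables of (term_i, term_j) correspondences.
    Precision is the share of found correspondences that are correct;
    recall is the share of reference correspondences that were found.
    """
    found, reference = set(found), set(reference)
    correct = found & reference
    precision = len(correct) / len(found) if found else 0.0
    recall = len(correct) / len(reference) if reference else 0.0
    return precision, recall


if __name__ == "__main__":
    # Illustrative data only.
    found = {("Truck", "Lorry"), ("Car", "Automobile"), ("Bus", "Train")}
    reference = {("Truck", "Lorry"), ("Car", "Automobile"),
                 ("Bus", "Coach"), ("Van", "Van")}
    print(precision_recall(found, reference))
```

Here two of the three found correspondences are correct, and two of the four reference correspondences are recovered.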

More recently, a technique based on minimal mappings has been presented that reduces the effort needed for mapping validation and visualization. Minimal mappings are high-quality mappings such that (i) all the other mappings can be computed from them in time linear in the size of the input graphs, and (ii) none of them can be dropped without losing property (i).

Formal Definition

Given two ontologies $O_i$ and $O_j$, we can define different types of (inter-ontology) relationships among their terms. Such relationships are collectively called alignments and can be categorized along different dimensions:

- similarity vs. logic: the difference between matchings (which state the similarity of ontology terms) and mappings (logical axioms, typically expressing logical equivalence or inclusion among ontology terms)

- atomic vs. complex: whether the alignments considered are one-to-one, or can involve more terms in a query-like formulation (e.g., LAV/GAV mapping)

- homogeneous vs. heterogeneous: whether the alignments relate only terms of the same type (e.g., classes only to classes, individuals only to individuals) or heterogeneity is allowed in the relationship

- type of alignment: the semantics associated with an alignment. It can be subsumption, equivalence, disjointness, part-of, or any user-specified relationship.

Subsumption, atomic, homogeneous alignments are the building blocks for richer alignments and have well-defined semantics in every Description Logic. Ontology matching and mapping are now introduced more formally.

An atomic homogeneous matching is an alignment that carries a similarity degree $s \in [0,1]$ describing the similarity of two terms of the input ontologies $O_i$ and $O_j$. A matching can be either computed by means of heuristic algorithms or inferred from other matchings.

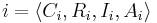

Formally we can say that, a matching is a quadruple  , where

, where  and

and  are homogeneous ontology terms,

are homogeneous ontology terms,  is the similarity degree of

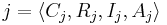

is the similarity degree of  . A (subsumption, homogeneous, atomic) mapping is defined as a pair

. A (subsumption, homogeneous, atomic) mapping is defined as a pair  , where

, where  and

and  are homogeneous ontology terms.

are homogeneous ontology terms.
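The two definitions can be sketched as simple data structures. This is only an illustration under stated assumptions: the class and field names are hypothetical, terms are represented as plain strings, and the fourth component of the quadruple is assumed to be an identifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Matching:
    """A matching as a quadruple: identifier, two terms, similarity degree."""
    id: str            # assumed fourth component: an identifier for the matching
    term_i: str        # term from the first ontology
    term_j: str        # term from the second ontology
    similarity: float  # similarity degree s, required to lie in [0, 1]

    def __post_init__(self):
        if not 0.0 <= self.similarity <= 1.0:
            raise ValueError("similarity degree must lie in [0, 1]")


@dataclass(frozen=True)
class Mapping:
    """A (subsumption, homogeneous, atomic) mapping as a pair of terms."""
    term_i: str
    term_j: str


if __name__ == "__main__":
    m = Matching(id="m1", term_i="Truck", term_j="Lorry", similarity=0.95)
    mu = Mapping(term_i="Truck", term_j="Vehicle")
    print(m)
    print(mu)
```

Keeping the similarity degree on the matching and not on the mapping mirrors the similarity vs. logic distinction: a matching states how alike two terms are, while a mapping asserts a logical relationship between them.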

Cognitive Science

For cognitive scientists interested in ontology alignment, the "concepts" are nodes in a semantic network that reside in brains as "conceptual systems." The focal question is: if everyone has unique experiences and thus different semantic networks, then how can we ever understand each other? This question has been addressed by a model called ABSURDIST (Aligning Between Systems Using Relations Derived Inside Systems for Translation). Three major dimensions have been identified for similarity as equations for "internal similarity, external similarity, and mutual inhibition."[7]

Ontology alignment is closely related to analogy formation, where "concepts" are variables in logic expressions.

Ontology alignment methods

Philosophy

For philosophers, much like cognitive scientists, the interest is in the nature of "understanding." The roots of discourse, however, may be traced to radical interpretation.

Visualization Tools

- ITM Align: semi-automated ontology alignment

- Optima: A Visual Ontology Alignment Tool

- CogZ: Cognitive Support and Visualization for Human-Guided Mapping Systems

- AgreementMaker: Efficient Matching for Large Real-World Schemas and Ontologies

References

- [1] J. Euzenat and P. Shvaiko. 2007. Ontology Matching. Springer-Verlag. ISBN 978-3-540-49611-3.

- [2] J. Berlin and A. Motro. 2002. Database Schema Matching Using Machine Learning with Feature Selection. Proc. of the 14th International Conference on Advanced Information Systems Engineering, pp. 452-466.

- [3] D. Aumueller, H. Do, S. Massmann, and E. Rahm. 2005. Schema and ontology matching with COMA++. Proc. of the 2005 International Conference on Management of Data, pp. 906-908.

- [4] S. Ponzetto and R. Navigli. 2009. Large-Scale Taxonomy Mapping for Restructuring and Integrating Wikipedia. Proc. of the 21st International Joint Conference on Artificial Intelligence (IJCAI 2009), Pasadena, California, pp. 2083-2088.

- [5] A. H. Doan and A. Y. Halevy. 2005. Semantic integration research in the database community: A brief survey. AI Magazine, 26(1).

- [6] C. A. Curino, G. Orsi, and L. Tanca. 2007. X-SOM: A Flexible Ontology Mapper. International Workshop on Semantic Web Architectures for Enterprises (SWAE'07), in conjunction with the 18th International Conference on Database and Expert Systems Applications (DEXA'07). http://www.polibear.net/blog/wp-content/uploads/2008/01/orsi-xsomflexmap.pdf.

- [7] R. Goldstone and B. Rogosky. 2002. Using relations within conceptual systems to translate across conceptual systems. Cognition 84, pp. 295–320.

See also

- Ontology (computer science)

- Rule Interchange Format (RIF)

- Data conversion

- Semantic Integration

- Semantic matching

- Minimal Mappings

- Interpretation "An interpretation can be the part of a presentation or portrayal of information altered in order to conform to a specific set of symbols."

- Graph isomorphism